Why Generative AI in Games? (Four Reasons)

1) Generative AI increases the efficiency of game development. Automating content creation reduces the time and resources needed, which lowers the cost of Textures, Meshes, Animations, Music and more.

2) Generative AI can make games more challenging and engaging for players because every playthrough is a different experience. That means more fun for everyone.

3) Natural Language Processing will enable anyone to create Games. Sometimes, in a Game, it’s “just easier to say what you want”. Additionally, people with Disabilities can participate in Games and Game-like applications.

4) Voice Assistants make the Game easier to understand and navigate because the Gamer can ask questions about Mechanics, Scoring and even Strategy. Access to more knowledge enhances the Game Experience.

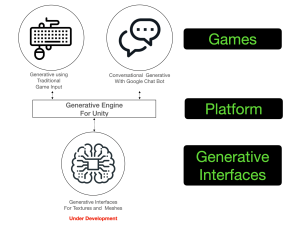

How does the Generative Builder for Unity work?

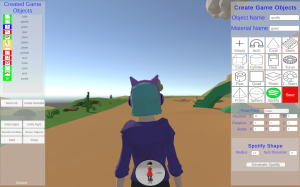

Generative Builder using Procedural Generation of 3D Game Objects on the Fly.

Dall-E 2 with Unity Builder for Unity (Image Generation)


Luma Labs AI with Unity Builder for Unity (Mesh Generation)

Generative Builder for Procedural Generative Games in the Future with AI

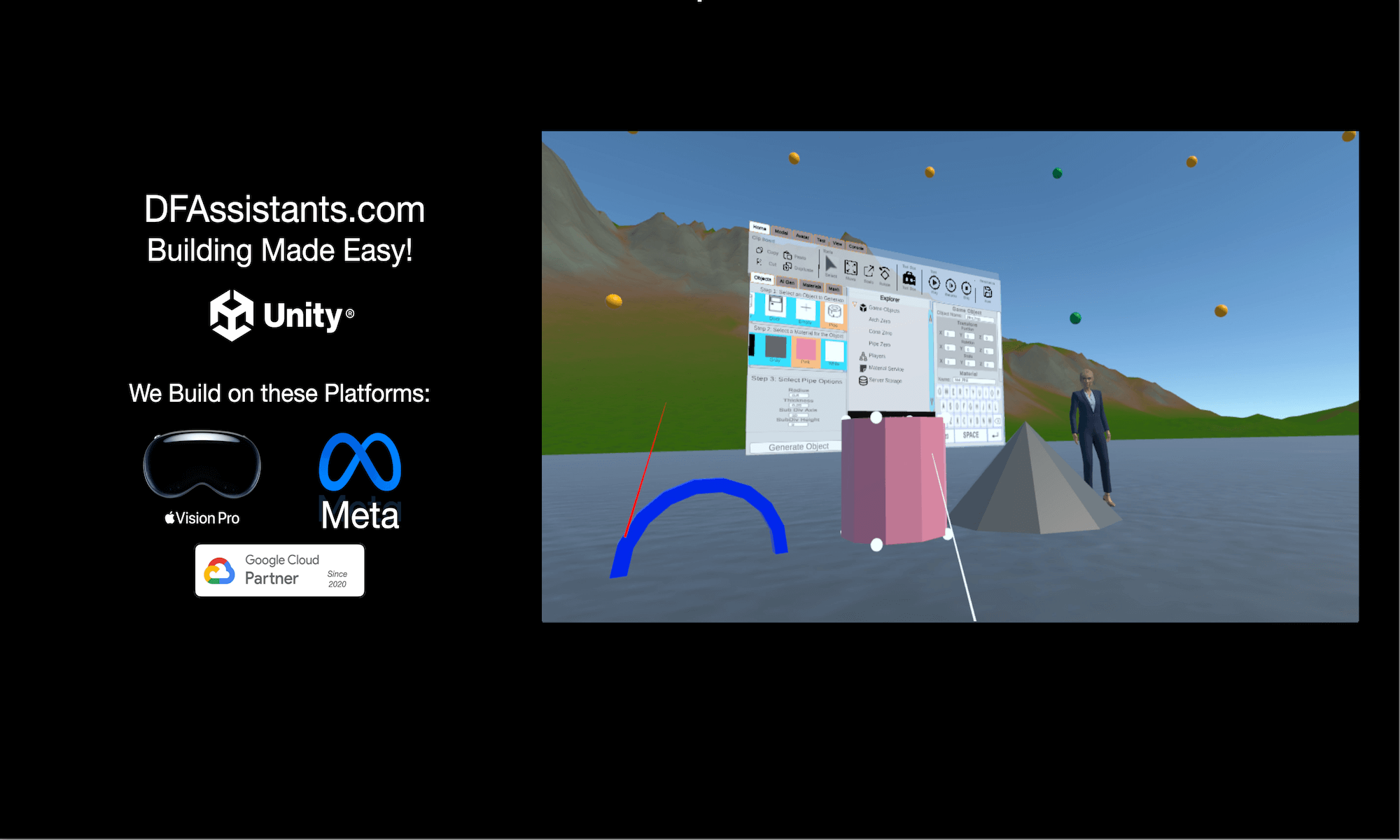

We generate Unity Stuff on the Fly on any platform, such as AR, VR and WebGL. Resources are stored server side, along with all configuration data.

Dialogflow Entities are a mechanism for identifying and extracting useful data from natural-language inputs. We use Session Entities to Dynamically represent Unity Stuff within a Google Chat Box Conversation.
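As a rough illustration of what a Session Entity looks like on the wire, the sketch below builds the JSON body of a Dialogflow ES `SessionEntityType` as a plain Python dictionary. The field names (`name`, `entityOverrideMode`, `entities`, `value`, `synonyms`) follow the Dialogflow ES REST resource; the project id, session id, and entity values are placeholders invented for this example, not values from the Generative Builder.

```python
import json


def build_session_entity_type(project_id, session_id, entity_name, values):
    """Build a Dialogflow ES SessionEntityType body as a plain dict.

    `values` maps each entity value to a list of extra synonyms.
    All ids passed in here are hypothetical placeholders.
    """
    return {
        "name": (
            f"projects/{project_id}/agent/sessions/{session_id}"
            f"/entityTypes/{entity_name}"
        ),
        # OVERRIDE replaces the agent-level entries for this session only.
        "entityOverrideMode": "ENTITY_OVERRIDE_MODE_OVERRIDE",
        "entities": [
            {"value": value, "synonyms": [value, *synonyms]}
            for value, synonyms in values.items()
        ],
    }


payload = build_session_entity_type(
    "my-project",          # hypothetical project id
    "user42-abc",          # hypothetical session id
    "ModelColor",          # entity name taken from the text
    {"Mushroom": ["mushroom color"], "Pirate": []},
)
print(json.dumps(payload, indent=2))
```

Because the entries live on the session rather than on the agent, each player can see a different set of values without the agent itself being edited.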

We use Entities to Statically represent Unity Stuff that doesn’t change within a Google Chat Box Conversation. Entities are also used for Claire’s Conversation.

Intents describe what the user wants to do in one turn of the conversation. We use Intents to map Your Voice onto Entities and Session Entities. For example, you can say “Claire Paint Mushroom” and it will invoke the ClairePaint intent below. The ModelColor is a session entity created in Unity.
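The mapping from a spoken phrase to an intent plus entity parameters can be pictured with a toy matcher. This is only a sketch of the idea: Dialogflow uses trained language models rather than prefix matching, and the trigger phrase and the `ModelColor` values below are assumptions made for the example.

```python
def match_intent(utterance, intents, entities):
    """Return the first intent whose trigger phrase starts the utterance.

    `intents` maps intent name -> trigger phrase.
    `entities` maps entity name -> list of allowed values.
    This is a toy stand-in for Dialogflow's matching, not its algorithm.
    """
    words = utterance.lower().split()
    for intent_name, trigger in intents.items():
        trigger_words = trigger.lower().split()
        if words[:len(trigger_words)] != trigger_words:
            continue
        # Whatever follows the trigger phrase is looked up as an entity value.
        rest = " ".join(words[len(trigger_words):])
        parameters = {}
        for entity_name, values in entities.items():
            for value in values:
                if value.lower() == rest:
                    parameters[entity_name] = value
        return {"intent": intent_name, "parameters": parameters}
    return None


intents = {"ClairePaint": "claire paint"}            # hypothetical trigger phrase
entities = {"ModelColor": ["Mushroom", "Pirate"]}    # hypothetical session entity values

result = match_intent("Claire Paint Mushroom", intents, entities)
print(result)
```

An utterance that matches no trigger phrase returns `None`, which plays the role of a fallback intent in this sketch.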

We interface with Generative AI Systems that Generate Images and Meshes. In this case, we gave Dall-E Text of “pirate” and it returned a Pirate Image/Material that we can use in the game.
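For readers curious what such a call might look like, here is a minimal sketch that prepares (but does not send) a request to OpenAI's image generation endpoint with the prompt “pirate”. The helper name, the image size, and the placeholder API key are assumptions for illustration; how the Generative Builder actually calls Dall-E is not described in the text.

```python
import json
import urllib.request

OPENAI_IMAGES_URL = "https://api.openai.com/v1/images/generations"


def build_image_request(prompt, api_key, size="512x512"):
    """Prepare a POST request asking for one generated image.

    The request is only constructed here; nothing is sent over the network.
    """
    body = {"model": "dall-e-2", "prompt": prompt, "n": 1, "size": size}
    return urllib.request.Request(
        OPENAI_IMAGES_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


# "YOUR_API_KEY" is a placeholder; passing the request to
# urllib.request.urlopen would perform the actual call.
request = build_image_request("pirate", api_key="YOUR_API_KEY")
print(request.get_method(), request.full_url)
```

The returned image would then need to be downloaded and applied as a Texture or Material on the Unity side, which is outside the scope of this sketch.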

Our Vision for the Generative Builder Starts Here.

Demonstration of Spotify in a Game

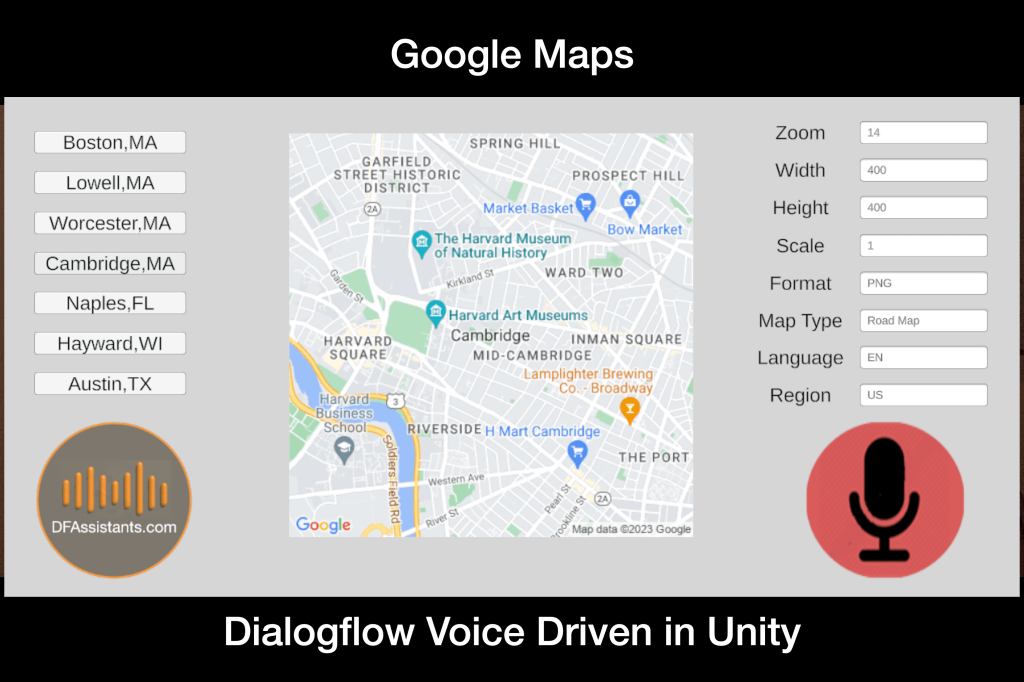

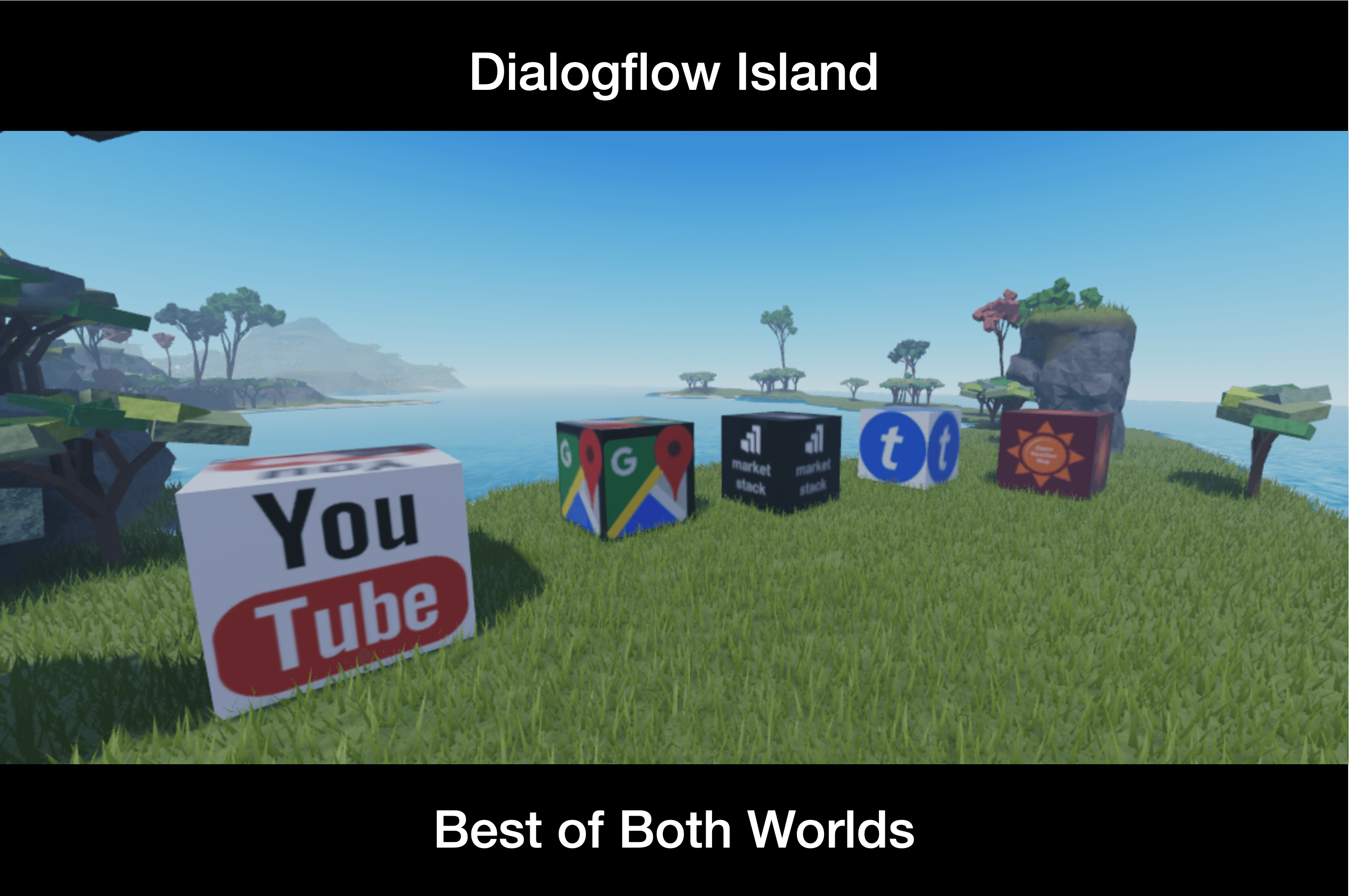

Spotify, YouTube, Ticketmaster, Google Maps and more apps Integrated with Dialogflow in a Dashboard

Spotify, YouTube, Ticketmaster, Google Maps and more apps Integrated with Dialogflow in a Meta Headset

Spotify, YouTube, Ticketmaster, Google Maps and more apps Integrated with Dialogflow in an AR Phone

Spotify, YouTube, Ticketmaster, Google Maps and more apps Integrated with Dialogflow for Web 2.0 (3.0*).

A Complete list of Voice Solutions from Easy to More Complicated

Speech to Text and Text to Speech

The Dialogflow System is an Industrial-Strength Natural Language Processing system that can easily perform Speech to Text and/or Text to Speech for any sort of Unity Application.

Unity’s User Interface Components

User Interface Components are the parts we use to build Unity apps. They add interactivity to a user interface, providing voice input points for the user as they navigate their way around; think buttons, scrollbars, menu items and checkboxes.

Unity’s Visual Scripting

Unity’s Visual Scripting is a visual approach to manipulating objects and properties within Unity without writing code. The logic is built by connecting visual nodes together, empowering artists, designers, and programmers. Voice Input can now trigger Flow Graphs.

Unity’s Event System

We Extended Unity’s Event System to Send Events to objects in the application based on Voice input. The implementation is based on a publish and subscribe model.
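The publish and subscribe model mentioned above can be sketched in a few lines. The class and handler names are hypothetical and the example is written in Python rather than Unity C#; it only shows the pattern of routing a recognized intent to every object that subscribed to it.

```python
from collections import defaultdict


class VoiceEventBus:
    """Toy publish/subscribe bus keyed by intent name (hypothetical design)."""

    def __init__(self):
        self._subscribers = defaultdict(list)

    def subscribe(self, intent_name, handler):
        """Register a callable to run whenever `intent_name` is published."""
        self._subscribers[intent_name].append(handler)

    def publish(self, intent_name, parameters):
        """Call every handler for the intent; return how many were notified."""
        handlers = self._subscribers.get(intent_name, [])
        for handler in handlers:
            handler(parameters)
        return len(handlers)


bus = VoiceEventBus()
log = []

# Two independent objects react to the same voice-driven event.
bus.subscribe("ClairePaint", lambda p: log.append(("mesh", p["ModelColor"])))
bus.subscribe("ClairePaint", lambda p: log.append(("ui", p["ModelColor"])))

notified = bus.publish("ClairePaint", {"ModelColor": "Mushroom"})
print(notified, log)
```

The publisher never needs to know which objects are listening, which is what lets new voice-driven behaviors be added without touching the code that handles recognition.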

Unity’s Game Object Location Services

We Extended Unity’s Game Object Location Services to use Multiple Session Entities so Game Objects can be referenced by just using your voice.

Unity’s Animator and Animations

We created a bridge to the Unity Animator and Animations so that voice input can trigger different Animations. Claire’s movement is completely driven by voice (i.e., Intent Responses) using Dialogflow.

Unity’s Platforms

We created a method for effectively building Dialogflow Gateway projects on different platforms with very few changes to the build configuration. This technology enables us to “Build and Run Anywhere”. The problems that do arise generally come from the different Cameras and the different input devices (and sometimes shaders).

Unity’s Multi-Player Game Objects

Multi-Player Games make up almost 1/3 of all Games Played. We have extended the Dialogflow NLP engine to enable voice to manipulate Multi-Player Game Objects. If you use your voice to rotate a multi-player game object by 45 degrees, the cloned game objects running on other people’s computers will also rotate 45 degrees. To do this, we integrated Dialogflow with the Photon Unity Networking framework.

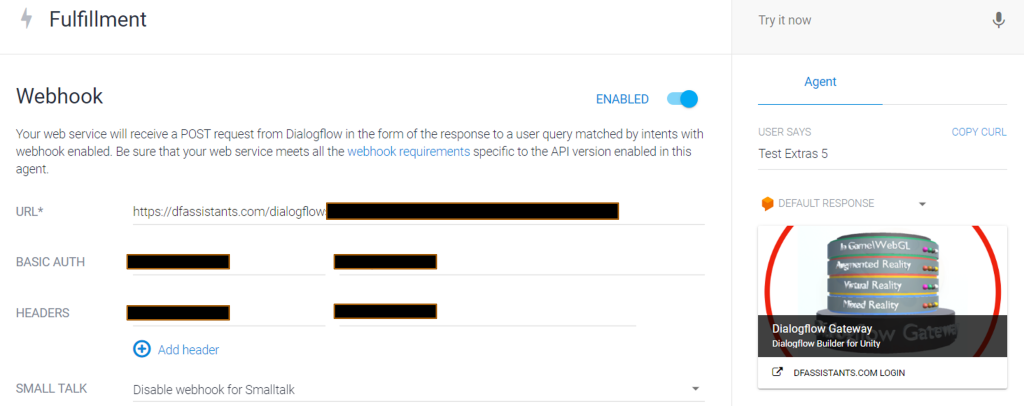

Unity’s Integration with Dialogflow Webhook

We use a Tomcat server as an integration point between Unity Networking and the Dialogflow Webhook. Every request carries a Session Id. Part of the Session Id is random, but the other part represents a User’s identity. This gives us a User Context for personalization, such as Assistant Type, Avatar usage, security settings, images, 3D Objects, files, etc., which is SHARED between the Unity Platform and the Dialogflow Webhook. Personalization and Security are important Features.
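One way to picture a Session Id that is part identity and part random is sketched below. The layout (user part first, a hyphen, then a random hex suffix) and the function names are assumptions made for this example; the text does not specify the real format.

```python
import secrets


def make_session_id(user_id):
    """Combine a user identity with a random suffix (assumed layout)."""
    return f"{user_id}-{secrets.token_hex(8)}"


def user_from_session_id(session_id):
    """Recover the user part by splitting on the last hyphen."""
    user_id, _, _random_part = session_id.rpartition("-")
    return user_id


session_id = make_session_id("rebecca-v")   # hypothetical user identity
print(session_id, user_from_session_id(session_id))
```

With a layout like this, both the Unity side and the webhook can derive the same user from the Session Id and look up that user's personalization settings.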

Collaboration with a Combination of Integrations.

If we integrate #8, #7 and #5 from above, we start to think about “Inter Reality” Collaboration, where individuals using Different Head Sets need to work together. Furthermore, for the Enterprise, the problem becomes worse when you consider the legacy of mobile app and Web investment. The underlying problem is that all these systems have different input systems: Keyboard, Touch Screen, Game Controller, MRTK, etc. The only common input system, needing just a speaker and a mic, is Voice. Voice will be Ubiquitous in the Enterprise. (See Below).

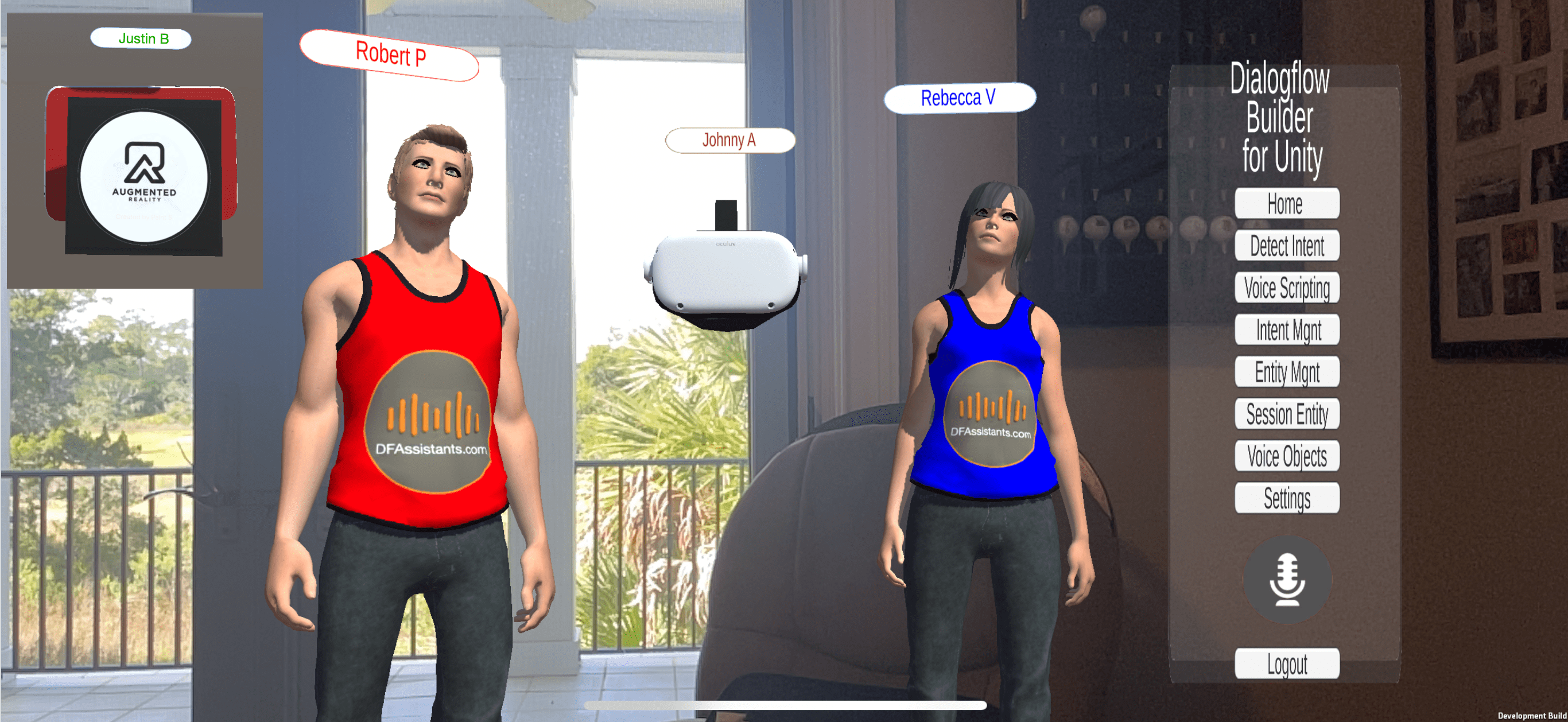

Voice Avatars using Dialogflow

Overview of Dialogflow Voice based Avatars Shown Above.

Above are four Voice-driven Avatars collaborating. The Blue Shirted person (Rebecca V) is from the Browser world. The Red Shirted person (Robert V) is from a Unity Environment (Mac, Windows, ...). The Meta headset person (Johnny A) is using a Meta Head Set, while the Phone person (Justin B) is using an ARCore AR Mobile device to participate in the Collaboration. Each Avatar’s behavior is driven by the voice of its owner.

Below is a similar Scene but from an Augmented Reality Perspective

The View from Justin B’s Augmented Reality Camera (See Inset).

Dialogflow Gateway Description

The Dialogflow Gateway directly interacts with Google’s Dialogflow System from the Unity Development environment. It is a complete implementation from complicated Intent and Entities Creation to Simple Detect Intent Texts. But this is only the starting point for integrating Dialogflow’s functionality with Unity’s rich set of SubSystems. Below is a list of Ten Integrations of Dialogflow with Unity.

Webhook For DFAssistants.com Login